Welcome to my blog on algorithms! Today, we’ll dive into the question: is the Minimax algorithm hard? Let’s explore this fascinating topic together.

Understanding the Complexity of Minimax Algorithm: Is It Really That Hard?

The Minimax algorithm is a fundamental technique used in game theory and artificial intelligence, particularly for two-player zero-sum games. It enables computers to make optimal decisions by considering all possible moves and their outcomes. To understand the complexity of the Minimax algorithm, we need to delve into its operation, assessing whether it’s truly difficult or not.

At the heart of the Minimax algorithm is a recursive tree structure that represents all possible game states, with each node of the tree representing a unique game state. The algorithm navigates this tree to determine the best move, based on the assumption that both players will act optimally to either maximize (for the maximizing player) or minimize (for the minimizing player) their scores.
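To make the tree traversal concrete, here is a minimal sketch in Python. It assumes a toy representation that is not part of any library: a game tree written as nested lists, where a plain number is a finished game scored from the maximizing player's point of view.

```python
from typing import Union

# Assumed toy representation: a number is a terminal score (from the
# maximizing player's point of view); a list holds the child positions.
Tree = Union[int, list]


def minimax(node: Tree, maximizing: bool) -> int:
    """Return the minimax value of `node`."""
    if not isinstance(node, list):  # leaf: the game is over here
        return node
    # The turn passes to the other player at every level of the tree.
    child_values = [minimax(child, not maximizing) for child in node]
    return max(child_values) if maximizing else min(child_values)


# Max chooses one of three moves; Min then picks the reply that hurts Max most.
tree = [[3, 5], [2, 9], [0, 7]]
print(minimax(tree, maximizing=True))  # prints 3
```

Min would answer the three moves with 3, 2, and 0 respectively, so the best Max can guarantee is 3.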

The complexity of the Minimax algorithm is primarily determined by two factors: the branching factor and the search depth. The branching factor describes the average number of legal moves at each game state, while the search depth is the number of moves the algorithm looks ahead to assess potential outcomes. The time complexity of the algorithm can be expressed as O(b^d), where b is the branching factor, and d is the search depth.

One of the main challenges with the Minimax algorithm is its exponential growth in complexity with increased search depth. As the depth increases, the number of nodes that need to be evaluated grows exponentially, leading to significantly longer processing times. This issue is often referred to as the “combinatorial explosion.”
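A few lines of arithmetic show how quickly b^d grows. The sketch below assumes a uniform tree in which every position has exactly b moves; the branching factors are illustrative round numbers, not measurements of any particular game.

```python
def leaf_count(b: int, d: int) -> int:
    """Positions at depth d in a uniform tree with branching factor b."""
    return b ** d


# Each extra level multiplies the work by b.
for b in (3, 10, 35):
    for d in (2, 4, 6, 8):
        print(f"b={b:>2}  d={d}  leaves={leaf_count(b, d):,}")
```

Going two levels deeper with b = 35 multiplies the number of positions by 1,225, which is why a modest increase in depth can turn seconds into hours.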

To address this problem, several techniques have been developed, such as Alpha-Beta pruning and iterative deepening. Alpha-Beta pruning reduces the number of nodes that must be examined without changing the result. Iterative deepening does not reduce the node count by itself; it searches to depth 1, then 2, and so on, so that a usable move is always available when the time budget runs out.

In conclusion, while the Minimax algorithm’s core concept is relatively simple, its complexity can indeed be challenging due to the exponential growth in processing time with increased search depth. However, through the use of optimization techniques, it is possible to manage this complexity and efficiently harness the power of the Minimax algorithm in various game scenarios.

Can You Always Win a Game of Tetris?

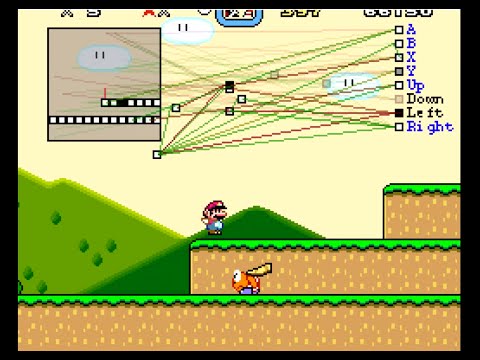

MarI/O – Machine Learning for Video Games

Is it possible for you to defeat the minimax algorithm?

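It is unlikely that you can defeat the minimax algorithm when it is correctly implemented and able to search the whole game tree. Minimax is designed for turn-based, two-player games with perfect information, such as chess or tic-tac-toe. When the full tree is searchable, as in tic-tac-toe, it plays optimally, so the best you can achieve against it is the game’s theoretical result (a draw in tic-tac-toe). In larger games such as chess, the search has to stop at a limited depth, and the program is only as strong as its search depth and evaluation function allow.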

One way to potentially “defeat” the algorithm is by using a more efficient technique, such as alpha-beta pruning, an optimized version of minimax that reduces the number of nodes to be evaluated. Alpha-beta returns the same move as plain minimax at the same depth, but in the same amount of time it can search deeper. It still relies on the underlying principles of minimax, so it refines the algorithm rather than defeating it.

Another approach is to use a stronger heuristic to evaluate game states without calculating the entire game tree. Against a depth-limited minimax, a player with a more accurate evaluation can come out ahead. However, this does not guarantee a victory, and it offers no advantage against a minimax search that reaches the end of the game.

In summary, you can outperform a depth-limited minimax with deeper search or better evaluation, but you cannot do better than the game’s theoretical result against a correctly implemented minimax that searches the full game tree.

What is the complexity of the minimax algorithm?

The complexity of the minimax algorithm depends on the depth of the game tree and the number of nodes at each level. In general, the time complexity of the minimax algorithm is O(b^d), where b is the branching factor (number of child nodes for each node) and d is the depth of the game tree.

The space complexity of the minimax algorithm is O(bd) when all children of a node are generated at once, because a depth-first search only keeps the current path and the siblings along it in memory. If children are generated one at a time, this drops to O(d). Alpha-beta pruning does not change the space bound, but it reduces the time cost by cutting off branches that don’t need to be explored: with ideal move ordering it examines roughly O(b^(d/2)) nodes.
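The gap between time and space can be observed directly. The sketch below is an instrumented search over an assumed uniform tree (every position has b moves, every leaf scores 0, since only the counts matter); the function name and the `stats` dictionary are made up for this illustration.

```python
def count_search(depth: int, b: int, maximizing: bool, level: int, stats: dict) -> int:
    """Depth-first minimax over a uniform tree, recording work and stack depth."""
    stats["nodes"] += 1                                   # time: one unit per position
    stats["max_stack"] = max(stats["max_stack"], level)   # space: deepest call so far
    if depth == 0:
        return 0  # placeholder score; the tree shape is what we measure
    values = [
        count_search(depth - 1, b, not maximizing, level + 1, stats)
        for _ in range(b)
    ]
    return max(values) if maximizing else min(values)


stats = {"nodes": 0, "max_stack": 0}
count_search(depth=4, b=3, maximizing=True, level=0, stats=stats)
print(stats)  # prints {'nodes': 121, 'max_stack': 4}
```

With b = 3 and d = 4 the search touches 1 + 3 + 9 + 27 + 81 = 121 positions, yet the call stack never grows beyond 4 levels below the root.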

What are the drawbacks of the minimax algorithm?

The minimax algorithm is a widely used decision-making algorithm in game theory and artificial intelligence, particularly in two-player, zero-sum games. Despite its effectiveness and popularity, it has several drawbacks:

1. Computational Complexity: The minimax algorithm has an exponential time complexity of O(b^d), where b represents the branching factor (average number of possible moves per position) and d is the depth of the game tree. This can result in significant computational overhead, especially for games with large game trees.

2. Scalability Issues: Due to the high computational complexity, the minimax algorithm may not scale well for games with large search spaces or a high branching factor. As a result, it may become impractical to use for complex games, such as Go or chess, without employing additional techniques like alpha-beta pruning or heuristic evaluation functions.

3. Optimality Assumption: The minimax algorithm assumes that both players play optimally at every move. In practice, human players or even AI agents do not always make the best possible moves. Minimax still secures at least the value it computed, but it does not deliberately steer toward positions where a fallible opponent is likely to go wrong, so it may miss chances to win faster or by a larger margin.

4. Difficulty in Evaluating Positions: Evaluating the “goodness” of a game state is critical for the minimax algorithm. However, it can be challenging to design accurate evaluation functions, particularly for games with numerous factors influencing the overall position. This issue may limit the performance of the minimax algorithm in specific domains.

5. Depth Limitations: Due to the computational constraints, it is often necessary to set a depth limit when searching the game tree. Implementing such a limit may lead to suboptimal or even incorrect decisions, as it prevents the algorithm from fully exploring the entire game tree. Consequently, the algorithm could potentially overlook better moves or strategies.
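The effect of a depth limit can be seen on a small game. The sketch below assumes a simple subtraction game chosen only for illustration: players alternately take 1 to 3 stones from a pile, and whoever takes the last stone wins. The `evaluate` heuristic is a deliberately uninformative placeholder that returns 0 ("unknown") whenever the search is cut off.

```python
def evaluate(stones: int, maximizing: bool) -> float:
    """Placeholder heuristic used at the depth limit: 0.0 means 'unknown'."""
    return 0.0


def depth_limited_minimax(stones: int, depth: int, maximizing: bool) -> float:
    """Value of the position for the maximizing player: +1 win, -1 loss."""
    if stones == 0:
        # The previous player took the last stone, so the side to move has lost.
        return -1.0 if maximizing else 1.0
    if depth == 0:
        return evaluate(stones, maximizing)  # cut off: fall back on the heuristic
    values = [
        depth_limited_minimax(stones - take, depth - 1, not maximizing)
        for take in (1, 2, 3)
        if take <= stones
    ]
    return max(values) if maximizing else min(values)


print(depth_limited_minimax(5, depth=2, maximizing=True))  # prints 0.0
print(depth_limited_minimax(5, depth=3, maximizing=True))  # prints 1.0
```

With 5 stones the player to move can win by taking one stone, but a 2-ply search stops just short of seeing it and reports "unknown"; one more ply reveals the win. A better evaluation function would narrow that gap without searching deeper.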

Is minimax an aspect of deep learning within the context of algorithms?

What makes the minimax algorithm difficult to implement and optimize in complex game scenarios?

The minimax algorithm is a popular decision-making technique used in various two-player, turn-based games like chess, tic-tac-toe, and checkers. However, implementing and optimizing the minimax algorithm in complex game scenarios can be quite challenging due to several reasons:

1. Large search space: In complex games, the number of positions to consider grows exponentially with the number of moves the algorithm looks ahead. This results in a vast search space, making it computationally expensive for the algorithm to evaluate all possible outcomes.

2. Computational complexity: Minimax requires evaluating the game tree at different depths to find the best move. As the depth of the search increases, the computational cost grows exponentially, which can make the process slow and inefficient, especially in real-time scenarios.

3. Heuristic Evaluation Function: Finding an accurate heuristic evaluation function for complex games is difficult. An effective evaluation function should quickly estimate the value of a game state without exploring the full game tree. Designing such a function requires deep domain knowledge and may not always generalize well to different situations.

4. Alpha-beta pruning: Although alpha-beta pruning can significantly reduce the search space, its effectiveness largely depends on the order in which moves are explored. If the best moves are not explored early, the algorithm might not benefit from the pruning and will still need to search through many nodes.

5. Memory consumption: A plain depth-first minimax only needs to keep the current line of play in memory, so its own footprint is modest. Memory becomes a concern when an implementation builds the whole game tree up front, or when it caches positions it has already evaluated; in games with a large branching factor, both can grow very quickly and cause performance issues.

6. Parallelization: Minimax does not easily lend itself to parallelization, making it harder to take advantage of modern multi-core processors. Although parallel versions of minimax exist, they often involve additional overhead and complexity in managing the synchronization between parallel threads.
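The point about move ordering in item 4 can be checked on a tiny example. The sketch below assumes a toy tree written as nested lists (numbers are leaf scores for the maximizing player) and counts how many leaves alpha-beta evaluates when the same moves are listed best-first and worst-first. The function name is made up for this illustration.

```python
import math


def alphabeta_leaves(node, maximizing, alpha=-math.inf, beta=math.inf):
    """Return (value, number of leaves evaluated) for a nested-list tree."""
    if not isinstance(node, list):
        return node, 1
    best = -math.inf if maximizing else math.inf
    leaves = 0
    for child in node:
        value, n = alphabeta_leaves(child, not maximizing, alpha, beta)
        leaves += n
        if maximizing:
            best = max(best, value)
            alpha = max(alpha, best)
        else:
            best = min(best, value)
            beta = min(beta, best)
        if alpha >= beta:  # the remaining children cannot change the result
            break
    return best, leaves


best_first = [[3, 5], [2, 9], [0, 7]]   # strongest root move listed first
worst_first = [[0, 7], [2, 9], [3, 5]]  # same moves, weakest listed first

print(alphabeta_leaves(best_first, True))   # prints (3, 4)
print(alphabeta_leaves(worst_first, True))  # prints (3, 6)
```

Both orderings return the same value, but the worst-first ordering evaluates all six leaves and gains nothing from pruning.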

Overall, while the minimax algorithm is a powerful tool for simpler games, it faces several challenges when applied to more complex game scenarios. To overcome these limitations, researchers have developed enhancements and alternative algorithms such as Monte Carlo Tree Search, which better handle complex games with large search spaces and high computational demands.

How does the minimax algorithm’s time complexity affect its performance in games with a large search tree?

The minimax algorithm is a decision-making algorithm used in two-player games with perfect information, such as chess, tic-tac-toe, and checkers. It works by exploring the possible moves of both players, assuming that each plays optimally: one player tries to maximize the score while the other tries to minimize it. In this context, time complexity becomes a crucial factor in determining the performance of the algorithm.

The time complexity of the minimax algorithm depends on the branching factor (number of possible moves) and the depth of the search tree (number of levels). In general, it has a complexity of O(b^d), where b is the branching factor and d is the depth of the search tree. As the search depth increases, the time required to compute the best move grows exponentially.

In games with a large search tree, this can lead to significant performance issues, making it impractical for real-time play or even causing it to be impossible to find an optimal move within a reasonable time. This is particularly true for complex games like chess, where the branching factor is around 35 and there can be many levels to explore.

To address this issue, several strategies have been developed to improve the efficiency of the minimax algorithm and make it more suitable for games with large search trees:

1. Alpha-Beta Pruning: This technique reduces the number of explored nodes by “pruning” branches that provably cannot change the final decision, skipping them during the search. It returns the same result as plain minimax, and with good move ordering it can cut the number of examined nodes to roughly O(b^(d/2)).

2. Iterative Deepening: Rather than searching to a fixed depth, this method starts with a shallow search and increases the depth step by step until the time limit is reached or the tree is exhausted, then plays the best move from the deepest completed search. Because most of the work is in the deepest level, the repeated shallow searches add relatively little cost.

3. Heuristic Evaluation Functions: In some cases, it is possible to estimate the value of a game state without fully exploring the search tree. By using an evaluation function that provides a good approximation, the minimax algorithm can reduce the depth of the search tree while maintaining a reasonable level of accuracy.

4. Parallelization: The minimax algorithm can be parallelized by dividing the search tree among multiple processors or cores. This shortens the elapsed time per move, although the total amount of work is not reduced and may even increase.
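Iterative deepening from the list above can be sketched as a loop around a depth-limited search. The example assumes a simple subtraction game chosen only for illustration (take 1 to 3 stones, whoever takes the last stone wins) and checks the clock only between depths; a real engine would also need to abort a search that is already running.

```python
import time


def value(stones: int, depth: int, maximizing: bool) -> int:
    """Depth-limited minimax value: +1 win, -1 loss, 0 unknown at the cutoff."""
    if stones == 0:
        return -1 if maximizing else 1  # the side to move has already lost
    if depth == 0:
        return 0
    vals = [value(stones - t, depth - 1, not maximizing) for t in (1, 2, 3) if t <= stones]
    return max(vals) if maximizing else min(vals)


def best_take(stones: int, depth: int) -> int:
    """Best move for the maximizing player according to a search of `depth` plies."""
    takes = [t for t in (1, 2, 3) if t <= stones]
    return max(takes, key=lambda t: value(stones - t, depth - 1, False))


def iterative_deepening(stones: int, max_depth: int, time_budget_s: float) -> int:
    deadline = time.monotonic() + time_budget_s
    choice = None
    for depth in range(1, max_depth + 1):
        # Always finish depth 1 so that some move is available.
        if choice is not None and time.monotonic() >= deadline:
            break
        choice = best_take(stones, depth)
    return choice


print(iterative_deepening(6, max_depth=3, time_budget_s=1.0))
```

With 6 stones, the depth-1 search has no information and falls back on the first legal move, while the depth-3 search finds that taking 2 stones leaves the opponent in a lost position.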

In conclusion, while the time complexity of the minimax algorithm can negatively impact its performance in games with a large search tree, there are various strategies that can help mitigate this issue and make the algorithm more efficient for complex games.

How can pruning techniques improve the effectiveness of the minimax algorithm in solving hard problems?

In the context of algorithms, pruning techniques can significantly improve the effectiveness of the minimax algorithm in solving hard problems. The minimax algorithm is a decision-making strategy that helps in finding the optimal move in two-player games like chess and tic-tac-toe. It involves recursively exploring all possible game states to determine the best move for a player. However, this can lead to a large number of states and quickly becomes computationally expensive, especially in complex games with many possible moves.

Pruning techniques, such as alpha-beta pruning, come into play by eliminating sections of the game tree that are unnecessary to explore, thereby reducing the computational effort required. Alpha-beta pruning works by keeping track of two values: alpha, the best score the maximizing player can already guarantee along the current path, and beta, the best (lowest) score the minimizing player can already guarantee.

During the tree traversal, as soon as alpha becomes greater than or equal to beta at a node, one of the players already has a better alternative earlier in the tree and would never allow the game to reach this node. Its remaining children therefore cannot affect the final result, and there is no need to explore further down that branch. In other words, alpha-beta pruning allows us to “prune” branches that we know will not contribute to the outcome, speeding up the minimax algorithm.
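The cutoff can be sketched in a few lines of Python. The example assumes a toy tree written as nested lists, where numbers are leaf scores for the maximizing player, and records which leaves are actually evaluated; the `seen` list exists only for this illustration.

```python
import math


def alphabeta(node, maximizing, alpha=-math.inf, beta=math.inf, seen=None):
    """Minimax value of a nested-list tree, skipping branches that cannot matter."""
    if not isinstance(node, list):
        if seen is not None:
            seen.append(node)  # record every leaf that is actually evaluated
        return node
    if maximizing:
        best = -math.inf
        for child in node:
            best = max(best, alphabeta(child, False, alpha, beta, seen))
            alpha = max(alpha, best)  # Max can already guarantee `alpha`
            if alpha >= beta:
                break  # Min has a better option elsewhere; stop here
        return best
    best = math.inf
    for child in node:
        best = min(best, alphabeta(child, True, alpha, beta, seen))
        beta = min(beta, best)  # Min can already hold Max to `beta`
        if alpha >= beta:
            break  # Max has a better option elsewhere; stop here
    return best


seen = []
tree = [[3, 5], [2, 9], [0, 7]]
print(alphabeta(tree, True, seen=seen))  # prints 3
print(seen)                              # prints [3, 5, 2, 0]
```

After the first move is shown to be worth 3, seeing a reply of 2 to the second move is enough to reject it, so the leaf 9 is never evaluated; the same happens with 7 in the third branch.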

Using pruning techniques like alpha-beta pruning can drastically reduce the number of nodes that the minimax algorithm needs to evaluate. This, in turn, leads to faster decision-making and improved ability to handle more complex games or problems with larger search spaces. Pruning also combines well with depth limits and time budgets: because each level of the tree costs less to search, the same budget reaches a greater depth.

In conclusion, pruning techniques enable the minimax algorithm to solve hard problems more efficiently by selectively exploring relevant branches of the game tree and discarding those that are not necessary. This results in improved performance, faster decision-making, and better overall effectiveness in tackling complex games and large search spaces.