Title: Unraveling the Mystery: What Does the Apriori Algorithm Do?

Introduction: The Enigma Behind the Algorithm

Do you ever wonder how some platforms seem to know exactly what you’re looking for, even when you don’t? It may seem like magic, but there’s a secret behind these mind-reading abilities. Let us embark on a journey to uncover it by answering the question: What does the Apriori algorithm do?

Understanding the Apriori Algorithm

The Apriori algorithm is one of the best-known algorithms in data mining and machine learning. Its primary function is to find frequent itemsets in large transactional datasets and derive association rules from them, which makes it useful for detecting patterns and uncovering hidden relationships between items. Think about it: have you ever noticed how websites recommend products that complement each other, like suggesting a book based on your reading history or predicting the movie you’d want to watch next? Association rules of the kind Apriori produces are one of the techniques that can power such recommendations, although real recommendation systems often combine them with other methods.

How Does the Apriori Algorithm Work?

Now that we’ve established what the Apriori algorithm does, let’s dive deeper into how it works. The algorithm follows a simple yet effective process: it first discovers frequent itemsets in the data and then derives rules from them. It consists of two main steps:

1. Identifying Frequent Itemsets

First, the algorithm scans the entire dataset and counts how often each individual item occurs. It keeps only the items that meet a minimum support threshold, a user-defined parameter that specifies how frequently an itemset must occur to be considered “frequent.” The algorithm then iteratively builds and counts larger itemsets (pairs, then triples, and so on), keeping only those that meet the minimum support requirement. This process continues until no more frequent itemsets can be found.

2. Generating Association Rules

Once the frequent itemsets are identified, the algorithm generates association rules from them. A rule such as {bread} → {butter} states that transactions containing the left-hand side tend to also contain the right-hand side, which supports data analysis, predictions, and recommendations. To filter these rules, the user sets a second parameter called the minimum confidence threshold. Confidence measures how often a rule holds: the fraction of transactions containing the left-hand side that also contain the right-hand side. Only the rules that meet the minimum confidence threshold are kept.

The result? A list of association rules that highlight strong relationships between items, making the algorithm well suited to applications such as market basket analysis and personalized recommendation systems.
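To make the two thresholds concrete, here is a minimal Python sketch that computes the support and confidence of a single rule. The basket data, the helper names (`support_count`, `support`, `confidence`), and the threshold values 0.4 and 0.7 are all made up for illustration; they are not taken from any particular library.

```python
# Made-up baskets: each set holds the items bought in one transaction.
transactions = [
    {"bread", "milk"},
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"milk", "eggs"},
    {"bread", "butter", "milk", "eggs"},
]

MIN_SUPPORT = 0.4     # assumed minimum support (fraction of transactions)
MIN_CONFIDENCE = 0.7  # assumed minimum confidence


def support_count(itemset, transactions):
    """Number of transactions that contain every item in `itemset`."""
    return sum(1 for t in transactions if set(itemset) <= t)


def support(itemset, transactions):
    """Fraction of transactions that contain every item in `itemset`."""
    return support_count(itemset, transactions) / len(transactions)


def confidence(antecedent, consequent, transactions):
    """Of the transactions containing the antecedent, the fraction that
    also contain the consequent."""
    both = set(antecedent) | set(consequent)
    return support_count(both, transactions) / support_count(antecedent, transactions)


lhs, rhs = {"bread"}, {"butter"}
s = support(lhs | rhs, transactions)
c = confidence(lhs, rhs, transactions)
print(f"support={s:.2f} confidence={c:.2f}")
print("kept" if s >= MIN_SUPPORT and c >= MIN_CONFIDENCE else "discarded")
```

Here {bread} → {butter} appears in 3 of 5 baskets (support 0.6), and 3 of the 4 bread baskets contain butter (confidence 0.75), so the rule passes both thresholds.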

Applications: Where is the Apriori Algorithm Used?

Now that we’ve answered the question of what the Apriori algorithm does and how it operates, let’s explore some of its most prominent applications:

- E-commerce: The Apriori algorithm helps online retailers make personalized product recommendations based on the customers’ purchase histories and the co-occurrence of items in the transactions.

- Movie and Music Streaming: Platforms like Netflix and Spotify suggest movies and songs based on users’ watching and listening habits, and association rules mined by the Apriori algorithm are one technique that can support such suggestions.

- Healthcare: The algorithm can detect patterns in patient records, identifying potential risk factors and helping healthcare providers make proactive decisions in treatment plans.

- Social Media: By mining data such as posts, comments, and reactions for interests that frequently occur together, the Apriori algorithm can help social media platforms define target groups for tailored advertising and content recommendations.

Conclusion: Decoding the Power of the Apriori Algorithm

In today’s rapidly evolving digital world, data is the key to unlocking new insights and enhancing user experiences. The Apriori algorithm shows how much value data mining can extract from everyday records, making it a useful tool for organizations across many industries. So, the next time you encounter an eerily accurate recommendation or an unexpected connection between items, remember that techniques like this one may be working quietly behind the scenes, ready to reveal new patterns and relationships.

What exactly is an algorithm? Algorithms explained | BBC Ideas

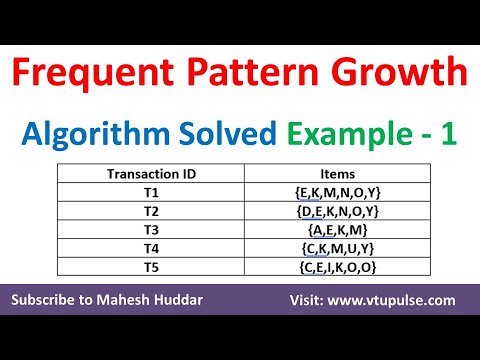

1. Frequent Pattern (FP) Growth Algorithm Association Rule Mining Solved Example by Mahesh Huddar

In data mining, how does the Apriori algorithm function?

The Apriori algorithm is a widely used data mining technique for discovering frequent itemsets in large transactional databases. These itemsets are the basis for association rules, which are useful for understanding relationships between items in the database.

The main idea behind the Apriori algorithm is to find those itemsets that occur frequently together across transactions. The algorithm functions based on the following key principles:

1. Support and Confidence: The algorithm relies on two important measures: support and confidence. Support is the fraction (or count) of transactions that contain a particular itemset, while confidence indicates how often an association rule holds true, that is, how often transactions containing the rule’s antecedent also contain its consequent. The user sets a minimum threshold for each: the minimum support threshold prunes the search space by eliminating infrequent itemsets, and the minimum confidence threshold filters out weak rules.

2. Downward Closure Property: The Apriori algorithm exploits a property called the downward closure property or the Apriori property. It states that if an itemset is frequent, all of its subsets must also be frequent, because every transaction that contains the itemset also contains each of its subsets. Consequently, if an itemset is infrequent, all of its supersets will be infrequent as well. This property helps reduce the number of candidate itemsets that need to be analyzed.
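For reference, the two measures and the downward closure property can be written formally. The notation (a transaction database D, itemsets X and Y) is introduced here for this sketch only.

```latex
\operatorname{supp}(X) = \frac{\lvert \{\, t \in D : X \subseteq t \,\} \rvert}{\lvert D \rvert},
\qquad
\operatorname{conf}(X \Rightarrow Y) = \frac{\operatorname{supp}(X \cup Y)}{\operatorname{supp}(X)}

X \subseteq Y \;\Longrightarrow\; \operatorname{supp}(Y) \le \operatorname{supp}(X)
```

The second line is the downward closure property: any transaction that contains Y also contains X, so a superset can never be more frequent than any of its subsets.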

The Apriori algorithm functions through these basic steps:

1. Initialization: Initially, it scans the entire dataset to identify frequent 1-itemsets (single items) that meet the minimum support threshold.

2. Iteration and Candidate Generation: Using the frequent itemsets discovered in the previous iteration, the Apriori algorithm generates new candidate k-itemsets (larger itemsets) by performing a join operation. This combines pairs of frequent (k-1)-itemsets that share the same first k-2 items (a common prefix, with items kept in sorted order).

3. Pruning: The algorithm uses the downward closure property to prune candidates that have infrequent sub-itemsets, reducing the number of itemsets to be analyzed.

4. Frequency Evaluation: The remaining candidate itemsets are evaluated to calculate their support values. Those that satisfy the minimum support threshold are considered frequent and retained for subsequent iterations.

5. Termination: The process is repeated iteratively until no more frequent itemsets can be generated. Finally, association rules are derived from these frequent itemsets by calculating the confidence of each rule.
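The five steps above can be sketched in Python as follows. This is a minimal, unoptimized sketch, not a reference implementation: it assumes items are sortable (for example, strings) and takes the minimum support as an absolute transaction count. The function name `apriori` and the basket data are illustrative.

```python
from itertools import combinations


def apriori(transactions, min_support):
    """Return {frozenset: support count} for every itemset that appears in at
    least `min_support` transactions (an absolute count in this sketch)."""
    transactions = [frozenset(t) for t in transactions]

    # Step 1 (initialization): count single items and keep the frequent ones.
    counts = {}
    for t in transactions:
        for item in t:
            key = frozenset([item])
            counts[key] = counts.get(key, 0) + 1
    current = {s: n for s, n in counts.items() if n >= min_support}
    frequent = dict(current)

    k = 2
    while current:
        # Step 2 (join): combine (k-1)-itemsets that share their first k-2 items.
        prev = sorted(tuple(sorted(s)) for s in current)
        candidates = set()
        for i, a in enumerate(prev):
            for b in prev[i + 1:]:
                if a[:-1] != b[:-1]:
                    continue
                cand = frozenset(a + (b[-1],))
                # Step 3 (prune): skip candidates with an infrequent (k-1)-subset.
                if all(frozenset(sub) in current for sub in combinations(cand, k - 1)):
                    candidates.add(cand)

        # Step 4 (frequency evaluation): one pass over the data per level.
        counts = {cand: 0 for cand in candidates}
        for t in transactions:
            for cand in candidates:
                if cand <= t:
                    counts[cand] += 1
        current = {s: n for s, n in counts.items() if n >= min_support}
        frequent.update(current)
        k += 1

    # Step 5 (termination): the loop stops once a level has no frequent itemsets.
    return frequent


baskets = [
    {"bread", "milk"},
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"milk", "eggs"},
    {"bread", "butter", "milk", "eggs"},
]
result = apriori(baskets, min_support=3)
for itemset, n in sorted(result.items(), key=lambda kv: (len(kv[0]), sorted(kv[0]))):
    print(sorted(itemset), n)
```

With a threshold of 3, {butter, milk} occurs in only two baskets, so the triple {bread, butter, milk} is discarded in step 3 without ever being counted.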

In summary, the Apriori algorithm iteratively discovers frequent itemsets in a large transactional database and uses them to generate association rules. Its efficiency comes from the minimum support threshold combined with the downward closure property, which together let it discard most candidate itemsets without counting them; the confidence measure is then used to filter the resulting rules.

Can you explain the Apriori algorithm in simple, everyday language?

The Apriori algorithm is a popular method used in the field of data mining, specifically for finding frequent itemsets in large datasets. Frequent itemsets are groups of items that frequently occur together in a given dataset.

Imagine you own a store and want to find out which products are often purchased together by customers. This information can help you make better decisions regarding product placement or creating special offers.

The Apriori algorithm works by following a simple principle: if an itemset is frequent, then all its subsets must be frequent as well. For example, if people often buy apples and oranges together, then apples and oranges must each be bought at least that often on their own, since every basket containing both fruits contains each of them.
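The apples-and-oranges guarantee is easy to check on a few made-up baskets. This short Python sketch (the data and the `count` helper are hypothetical) counts the pair and each fruit separately.

```python
# Made-up fruit-stand baskets, one set of items per customer.
transactions = [
    {"apples", "oranges"},
    {"apples", "oranges", "pears"},
    {"apples"},
    {"oranges", "bananas"},
    {"apples", "oranges", "bananas"},
]


def count(itemset):
    """Number of baskets that contain every item in `itemset`."""
    return sum(1 for t in transactions if itemset <= t)


pair = {"apples", "oranges"}
print("apples + oranges:", count(pair))
for fruit in sorted(pair):
    # Every basket holding both fruits also holds each fruit on its own,
    # so a single fruit's count can never fall below the pair's count.
    print(fruit, count({fruit}))
    assert count({fruit}) >= count(pair)
```

The pair appears in 3 baskets, while apples and oranges each appear in 4, consistent with the principle.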

Here’s an overview of how the Apriori algorithm works:

1. Initially, the algorithm counts the individual items’ occurrences in the dataset (single-item frequency).

2. Next, it identifies the items that meet a predefined minimum frequency or support threshold. Items not meeting this threshold are eliminated.

3. The algorithm then forms pairs (or combinations) of the remaining items and calculates their frequency in the dataset.

4. Again, only the pairs that meet the minimum support threshold move forward. The process repeats, forming larger item sets and checking their frequencies until no more frequent itemsets can be found.
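Here is a short Python sketch of the four steps on made-up store receipts. It assumes a minimum support of 3 receipts and stops after pairs to keep things short; a full implementation would keep repeating steps 3 and 4 for larger itemsets.

```python
from collections import Counter
from itertools import combinations

# Made-up store receipts; each list is one customer's basket.
receipts = [
    ["bread", "milk", "eggs"],
    ["bread", "milk"],
    ["milk", "cereal"],
    ["bread", "milk", "cereal"],
    ["eggs", "cereal"],
]
MIN_COUNT = 3  # assumed minimum support, as an absolute number of receipts

# Steps 1-2: count single items and keep only those meeting the threshold.
item_counts = Counter(item for r in receipts for item in set(r))
kept = sorted(i for i, n in item_counts.items() if n >= MIN_COUNT)

# Steps 3-4: pair up the surviving items, count each pair, filter again.
pair_counts = Counter()
for r in receipts:
    basket = set(r)
    for pair in combinations(kept, 2):
        if basket.issuperset(pair):
            pair_counts[pair] += 1
frequent_pairs = {p: n for p, n in pair_counts.items() if n >= MIN_COUNT}

print("kept items:", kept)
print("frequent pairs:", frequent_pairs)
```

Eggs appear on only two receipts, so they are dropped in step 2, and of the remaining pairs only bread and milk reach the threshold.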

By following these steps, the Apriori algorithm efficiently identifies patterns and associations between items in a dataset, making it valuable for tasks like market basket analysis and recommendation systems.

How does the Apriori algorithm help in identifying frequent itemsets from a transactional database?

The Apriori algorithm is a popular data mining technique used to identify frequent itemsets in a transactional database and derive association rules from these itemsets. Frequent itemsets are sets of items that appear together in a significant number of transactions.

The Apriori algorithm works based on the principle that if an itemset is frequent, then all of its subsets must also be frequent. This property helps to reduce the search space and computational complexity. The algorithm follows an iterative approach, generating candidate itemsets and pruning infrequent itemsets at each step.

Here’s a high-level overview of how the Apriori algorithm helps in identifying frequent itemsets:

1. Initialization: Start with individual items as candidate itemsets. Scan the transactional database and count the occurrence of each item.

2. Prune infrequent itemsets: Remove itemsets that do not meet the specified minimum support threshold. The remaining itemsets are deemed frequent.

3. Generate candidate itemsets: For each iteration, create new candidate itemsets by combining the previously identified frequent itemsets. For example, if the previous iteration produced frequent itemsets {A}, {B}, and {C}, the next iteration would generate candidate itemsets {A, B}, {A, C}, and {B, C}.

4. Repeat steps 2 and 3: Continue iterating and generating larger candidate itemsets until no more candidates can be generated or all remaining itemsets are pruned.

5. Derive frequent itemsets: At the end of the process, the frequent itemsets found at every level (single items, pairs, triples, and so on) together form the result, which can be used for further analysis or to generate association rules.
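Step 3’s example, turning {A}, {B}, and {C} into {A, B}, {A, C}, and {B, C}, can be reproduced with a few lines of Python. The `join` helper is hypothetical and uses a simple union-based join: it keeps every union of two frequent itemsets that has exactly k items. For larger k this can produce more candidates than a common-prefix join, so it is normally followed by a pruning check on subsets.

```python
from itertools import combinations


def join(frequent, k):
    """Hypothetical helper: build size-k candidates from frequent
    (k-1)-itemsets by keeping every pairwise union with exactly k items."""
    return {a | b for a, b in combinations(frequent, 2) if len(a | b) == k}


level1 = [frozenset({"A"}), frozenset({"B"}), frozenset({"C"})]
candidates = join(level1, 2)
print(sorted(sorted(c) for c in candidates))
```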

By following this approach, the Apriori algorithm efficiently identifies frequent itemsets and reduces the computational complexity of mining large transactional databases.

What are the key steps involved in implementing the Apriori algorithm for association rule mining?

The Apriori algorithm is a widely used method for association rule mining in large datasets. It aims to discover interesting relationships between items in a dataset, which can help in decision-making processes. Here are the key steps involved in implementing the Apriori algorithm:

1. Define the minimum support and confidence: These are the threshold values used to determine if an itemset or association rule is significant. Support refers to the frequency of an itemset in the dataset, while confidence measures how often an association rule holds: the proportion of transactions containing the rule’s antecedent that also contain its consequent.

2. Create the initial candidate itemsets: An itemset is simply a set of items, and a frequent itemset is one whose items occur together in enough transactions. The first step is to create all single-item itemsets and calculate their support.

3. Filter itemsets based on minimum support: Remove any candidate itemsets that do not meet the minimum support threshold. This step is important to ensure the algorithm focuses on significant itemsets and reduces computational complexity.

4. Create higher-order candidate itemsets: Generate larger candidate itemsets by joining the itemsets that passed the minimum support threshold in the previous step. Two itemsets of size k-1 are joined only if they share the same first k-2 items; any resulting candidate that has an infrequent (k-1)-subset can then be discarded without counting it.

5. Repeat steps 3 and 4: Continue the process of generating candidate itemsets and filtering them based on the minimum support threshold until no more itemsets can be generated.

6. Generate association rules: For each frequent itemset with at least two items, split it into two nonempty parts, an antecedent and a consequent, in every possible way. Calculate the confidence of each resulting rule and filter out those that do not meet the minimum confidence threshold.

7. Evaluate and analyze the results: Examine the output association rules and determine their usefulness in the given context. This could involve analyzing the discovered patterns, visualizing the relationships, or applying the rules to a decision-making process.
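Step 6 can be sketched in Python as below. The function name `generate_rules` and the support counts are hypothetical; the sketch assumes the input dictionary contains the support count of every frequent itemset, which is what a complete run of steps 2 through 5 provides (by the Apriori property, every subset of a frequent itemset is itself frequent).

```python
from itertools import combinations


def generate_rules(frequent, min_confidence):
    """Derive rules X -> Y from `frequent`, a dict mapping frozenset itemsets
    to support counts. Returns (antecedent, consequent, confidence) tuples."""
    rules = []
    for itemset, count in frequent.items():
        if len(itemset) < 2:
            continue  # a rule needs a nonempty antecedent and consequent
        # Every nonempty proper subset of the itemset can be the antecedent X.
        for r in range(1, len(itemset)):
            for antecedent in combinations(sorted(itemset), r):
                x = frozenset(antecedent)
                y = itemset - x
                conf = count / frequent[x]  # supp(X ∪ Y) / supp(X)
                if conf >= min_confidence:
                    rules.append((set(x), set(y), conf))
    return rules


# Hypothetical support counts, e.g. taken from 5 transactions.
frequent = {
    frozenset({"bread"}): 4,
    frozenset({"milk"}): 4,
    frozenset({"butter"}): 3,
    frozenset({"bread", "butter"}): 3,
    frozenset({"bread", "milk"}): 3,
}
rules = generate_rules(frequent, min_confidence=0.8)
for x, y, conf in rules:
    print(f"{sorted(x)} -> {sorted(y)} (confidence {conf:.2f})")
```

With a minimum confidence of 0.8, only {butter} → {bread} survives (confidence 3/3 = 1.0); {bread} → {butter} has confidence 3/4 = 0.75 and is filtered out.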

By following these steps, you can implement the Apriori algorithm for association rule mining and derive valuable insights from your dataset.

How does the Apriori algorithm efficiently reduce the search space for frequent patterns in large datasets?

The Apriori algorithm is a popular data mining technique for efficiently discovering frequent patterns in large datasets. Its main goal is to find all itemsets whose items appear together in at least a minimum number (or fraction) of transactions. These patterns are particularly useful for applications such as market basket analysis, recommendation systems, and association rule mining.

The Apriori algorithm reduces the search space for frequent patterns using a principle called the Apriori property. The property states that if an itemset is frequent, then all its subsets must also be frequent. Conversely, if an itemset is infrequent, then all its supersets will be infrequent as well. This property allows the algorithm to drastically reduce the number of candidate itemsets it needs to evaluate.

The Apriori algorithm employs a bottom-up approach and works in multiple iterations. In each iteration, it performs the following two steps:

1. Join step: Generate candidate itemsets by combining pairs of frequent itemsets from the previous iteration that share all but their last item.

2. Prune step: Eliminate candidates that have infrequent subsets using the Apriori property, thus reducing the search space.
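The join and prune steps together are often called candidate generation. Here is a minimal Python sketch, assuming items are sortable strings; the function name `apriori_gen` and the example itemsets are illustrative.

```python
from itertools import combinations


def apriori_gen(prev_frequent, k):
    """Build size-k candidates from a set of frequent (k-1)-itemsets."""
    prev = sorted(tuple(sorted(s)) for s in prev_frequent)
    prev_set = set(prev_frequent)
    candidates = set()
    for i, a in enumerate(prev):
        for b in prev[i + 1:]:
            # Join step: the two itemsets must share all but their last item.
            if a[:-1] != b[:-1]:
                continue
            cand = frozenset(a) | {b[-1]}
            # Prune step: drop the candidate if any (k-1)-subset is infrequent.
            if all(frozenset(sub) in prev_set for sub in combinations(cand, k - 1)):
                candidates.add(cand)
    return candidates


# Hypothetical frequent 2-itemsets; {B, D} is absent, i.e. infrequent.
level2 = {frozenset(p) for p in [("A", "B"), ("A", "C"), ("A", "D"), ("B", "C"), ("C", "D")]}
level3 = apriori_gen(level2, 3)
print(sorted(sorted(c) for c in level3))
```

Joining {A, B} with {A, D} yields {A, B, D}, but its subset {B, D} is not frequent, so the prune step discards it; only {A, B, C} and {A, C, D} remain to be counted.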

By iteratively applying these steps and exploiting the Apriori property, the algorithm can efficiently identify the frequent patterns in a large dataset, avoiding unnecessary evaluations of itemsets that cannot possibly be frequent.

In summary, the Apriori algorithm efficiently reduces the search space for frequent patterns in large datasets by leveraging the Apriori property and employing a bottom-up approach with join and prune steps. This process enables the algorithm to focus on evaluating only the promising candidate itemsets, making it a powerful and widely used technique for discovering frequent patterns in data.