Welcome to my algorithm blog! In this article, we look at unsupervised learning: the key concepts, the main families of algorithms, and how to choose and tune them. Let’s get started!

Unveiling the Potential of Unsupervised Learning Algorithms

Understanding the potential of unsupervised learning algorithms starts with their core principles, applications, and benefits. Unsupervised learning is a type of machine learning in which algorithms identify patterns in unlabeled data. The model is still trained on data, but no target labels tell it what the correct output is.

One of the primary advantages of unsupervised learning algorithms is their ability to discover hidden structures within datasets. This capability is essential in cases where obtaining labeled data is difficult, expensive, or time-consuming.

There are several types of unsupervised learning algorithms, including:

1. Clustering algorithms: These algorithms, such as k-means, hierarchical clustering, and DBSCAN, group similar data points together based on their features. Clustering is useful for summarizing large datasets and has applications in areas like customer segmentation, anomaly detection, and image segmentation.

2. Dimensionality reduction algorithms: These algorithms reduce the number of features in a dataset while preserving its essential characteristics, which allows for more efficient processing and visualization. Principal Component Analysis (PCA) and t-Distributed Stochastic Neighbor Embedding (t-SNE) are examples of dimensionality reduction techniques. PCA is commonly applied to data compression, noise reduction, and feature extraction, while t-SNE is used mainly for visualization.

3. Association rule learning algorithms: These algorithms find relationships between variables in datasets, often used for market basket analysis and recommender systems. Examples include the Apriori algorithm and the Eclat algorithm.

4. Generative models: These algorithms learn the underlying probability distribution of the data to generate new, similar data points. Examples include Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs). These models have found applications in areas such as image synthesis, data augmentation, and natural language processing.
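
To make the association rule idea from item 3 concrete, here is a minimal sketch of the three measures such algorithms typically report: support, confidence, and lift. The transactions and the function names are invented for illustration, and this is not an implementation of Apriori or Eclat, which add an efficient search over candidate itemsets on top of these measures.

```python
# Hypothetical shopping baskets, invented for illustration only.
transactions = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk"},
    {"bread", "milk"},
]


def support(itemset, transactions):
    """Fraction of transactions that contain every item in itemset."""
    itemset = set(itemset)
    return sum(itemset <= basket for basket in transactions) / len(transactions)


def confidence(antecedent, consequent, transactions):
    """Estimate of P(consequent | antecedent) from the transactions."""
    both = set(antecedent) | set(consequent)
    return support(both, transactions) / support(antecedent, transactions)


def lift(antecedent, consequent, transactions):
    """Confidence relative to the consequent's baseline support.

    Values above 1 suggest the items co-occur more often than chance,
    values below 1 suggest they co-occur less often.
    """
    return confidence(antecedent, consequent, transactions) / support(
        consequent, transactions
    )


rule_support = support({"bread", "milk"}, transactions)
rule_confidence = confidence({"bread"}, {"milk"}, transactions)
rule_lift = lift({"bread"}, {"milk"}, transactions)
print(rule_support, rule_confidence, rule_lift)
```

In this toy data the rule "bread implies milk" has a lift slightly below 1, so buying bread does not make milk more likely than it already is.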

Researchers and practitioners can use these algorithms to solve complex problems in many domains. They are valuable tools for analyzing unlabeled data, extracting meaningful insights, and supporting data-driven decision-making.

Can We Build an Artificial Hippocampus?

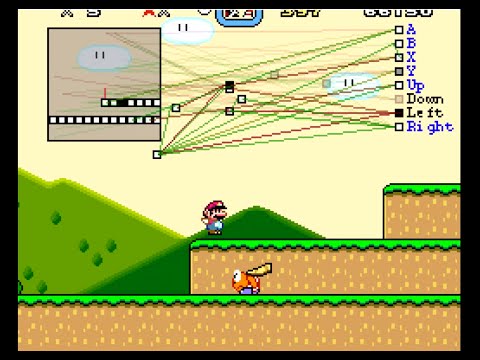

MarI/O – Machine Learning for Video Games

What algorithm is utilized for unsupervised learning?

Several algorithms are used for unsupervised learning. One popular choice is the K-means clustering algorithm, which partitions a set of observations into a chosen number of clusters so that each observation belongs to the cluster with the nearest mean. Other unsupervised learning algorithms include hierarchical clustering, DBSCAN, Gaussian mixture models, and self-organizing maps.

What is the most suitable algorithm for unsupervised machine learning?

There is no single “most suitable” unsupervised algorithm that fits all use cases. However, one of the most popular and widely used is K-means clustering.

K-means clustering partitions a set of data points into K clusters, where each data point belongs to the cluster with the nearest mean (the centroid). It alternates between assigning points to the nearest centroid and recomputing each centroid as the mean of its assigned points. This algorithm works well for problems such as segmentation, anomaly detection, and pattern recognition.
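
The assign-and-update loop can be sketched in a few lines of plain Python. This is a minimal illustration of the standard iteration (Lloyd's algorithm), assuming Euclidean distance and random initialization from the data points. The function names, the `seed` parameter, and the sample points are invented for this example.

```python
import random


def squared_distance(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def mean_point(points):
    """Coordinate-wise mean of a non-empty list of points."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))


def k_means(points, k, max_iterations=100, seed=0):
    """Return (labels, centroids) after running the K-means iteration."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    labels = None
    for _ in range(max_iterations):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        if new_labels == labels:
            break  # assignments stopped changing, so we have converged
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = mean_point(members)
    return labels, centroids


# Two well-separated groups of hypothetical 2-D points.
points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (8.0, 8.0), (8.2, 7.9), (7.8, 8.1)]
labels, centroids = k_means(points, k=2)
print(labels)
```

Which group receives label 0 depends on the random initialization, so only the grouping itself is meaningful. On harder data, K-means can settle in a poor local optimum, which is why practical implementations usually run several restarts.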

Another notable unsupervised learning algorithm is Hierarchical clustering. It creates a tree-like structure of nested clusters by successively merging or splitting clusters based on a distance metric.
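
As a rough sketch of the merging (agglomerative) variant, the code below repeatedly joins the two closest clusters until a requested number remain. It assumes Euclidean distance and single linkage, which is only one of several common linkage rules. The function names and sample points are invented for illustration, and the brute-force search is meant for small inputs.

```python
def euclidean(p, q):
    """Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q)) ** 0.5


def single_linkage(cluster_a, cluster_b, points):
    """Distance between the closest pair of members of two clusters."""
    return min(euclidean(points[i], points[j]) for i in cluster_a for j in cluster_b)


def agglomerative(points, num_clusters):
    """Merge the two closest clusters until num_clusters remain.

    Returns the final clusters (lists of point indices) and the merge
    history, which is the information a dendrogram would display.
    """
    clusters = [[i] for i in range(len(points))]  # every point starts alone
    merges = []
    while len(clusters) > num_clusters:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                d = single_linkage(clusters[a], clusters[b], points)
                if best is None or d < best[0]:
                    best = (d, a, b)
        d, a, b = best
        merges.append((clusters[a], clusters[b], d))
        merged = clusters[a] + clusters[b]
        clusters = [c for i, c in enumerate(clusters) if i not in (a, b)] + [merged]
    return clusters, merges


# Hypothetical points: two close pairs and one isolated point.
points = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0), (5.0, 6.0), (10.0, 0.0)]
clusters, merges = agglomerative(points, num_clusters=3)
print(clusters)
```

Running the loop down to a single cluster and keeping `merges` gives the full tree, which can then be cut at any level.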

Other common algorithms include DBSCAN (Density-Based Spatial Clustering of Applications with Noise), which groups data points together based on density, and Principal Component Analysis (PCA), a dimensionality reduction technique that helps identify patterns within high-dimensional data.
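
To show what "grouping by density" means in practice, here is a simplified sketch of the DBSCAN idea. It assumes Euclidean distance and counts a point as its own neighbor. The parameter names `eps` and `min_samples` follow common usage, but the code is an illustrative brute-force version with invented sample data, not a reference implementation.

```python
NOISE = -1


def euclidean(p, q):
    """Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q)) ** 0.5


def dbscan(points, eps, min_samples):
    """Label each point with a cluster id (0, 1, ...) or NOISE (-1)."""

    def neighbors(i):
        # All points within eps of point i, including i itself.
        return [j for j in range(len(points)) if euclidean(points[i], points[j]) <= eps]

    labels = [None] * len(points)
    cluster_id = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue  # already assigned to a cluster or marked as noise
        seeds = neighbors(i)
        if len(seeds) < min_samples:
            labels[i] = NOISE  # not a core point; may become a border point later
            continue
        labels[i] = cluster_id
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster_id  # border point: reachable but not core
            if labels[j] is not None:
                continue
            labels[j] = cluster_id
            j_neighbors = neighbors(j)
            if len(j_neighbors) >= min_samples:
                queue.extend(j_neighbors)  # j is a core point, keep expanding
        cluster_id += 1
    return labels


# Two dense squares of hypothetical points plus one isolated point.
points = [
    (0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0),
    (10.0, 10.0), (10.0, 11.0), (11.0, 10.0), (11.0, 11.0),
    (5.0, 5.0),
]
labels = dbscan(points, eps=1.5, min_samples=3)
print(labels)  # [0, 0, 0, 0, 1, 1, 1, 1, -1]
```

Note that the number of clusters is never passed in: it falls out of `eps` and `min_samples`, and the isolated point is reported as noise rather than forced into a cluster.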

In conclusion, the most suitable algorithm for unsupervised machine learning depends on the specific problem, dataset characteristics, and desired outcomes.

What are some examples of unsupervised algorithms?

Unsupervised algorithms are a type of machine learning algorithm that works with unlabelled data, allowing the model to learn patterns and structures within the data without guidance. Some examples of unsupervised algorithms include:

1. Clustering algorithms, which group similar data points together based on their characteristics, such as K-means, Hierarchical clustering, and DBSCAN.

2. Dimensionality Reduction algorithms, which transform high-dimensional data into lower-dimensional representations while preserving the underlying structure of the data. Examples include Principal Component Analysis (PCA) and t-distributed Stochastic Neighbor Embedding (t-SNE). Linear Discriminant Analysis (LDA) is often listed alongside them, but it relies on class labels and is therefore a supervised technique.

3. Anomaly Detection algorithms, which identify unusual data points or outliers in the dataset that deviate from the norm, such as Isolation Forest, Local Outlier Factor (LOF), and One-class Support Vector Machines (SVM).

4. Association Rule Learning algorithms, which discover relationships between variables in large datasets, often used for market basket analysis. Examples include Apriori, Eclat, and FP-growth algorithms.

5. Neural Networks for unsupervised learning, such as Self-Organizing Maps (SOM) and Autoencoders, which can learn latent representations of the input data and help discover patterns or features that might not be immediately apparent otherwise.
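
The anomaly detection idea from item 3 can be illustrated with a much simpler heuristic than the algorithms named there: score each point by the distance to its k-th nearest neighbor, so that isolated points get high scores. This is a swapped-in, distance-based sketch, not Isolation Forest, LOF, or a one-class SVM, and the function name and sample data are invented for the example.

```python
def euclidean(p, q):
    """Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q)) ** 0.5


def knn_outlier_scores(points, k):
    """Score each point by its distance to its k-th nearest neighbor.

    Larger scores mean the point sits in a sparser region. Requires
    k to be smaller than the number of points.
    """
    scores = []
    for i, p in enumerate(points):
        distances = sorted(euclidean(p, q) for j, q in enumerate(points) if j != i)
        scores.append(distances[k - 1])
    return scores


# Hypothetical data: a tight group plus one far-away point.
points = [(1.0, 1.0), (1.1, 0.9), (0.9, 1.2), (1.2, 1.1), (9.0, 9.0)]
scores = knn_outlier_scores(points, k=2)
most_anomalous = max(range(len(points)), key=lambda i: scores[i])
print(most_anomalous)  # 4, the index of the isolated point
```

Turning scores into a yes/no decision still requires a threshold, which in practice is chosen from domain knowledge or from the score distribution.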

In summary, unsupervised algorithms include techniques such as clustering, dimensionality reduction, anomaly detection, association rule learning, and specific types of neural networks. These algorithms allow machines to learn patterns and structures within unlabelled datasets, providing valuable insights and understanding without explicit guidance.

What are the key differences between supervised and unsupervised learning algorithms, and when should one choose unsupervised methods over supervised ones?

The key differences between supervised and unsupervised learning algorithms can be summarized as follows:

1. Learning Approach: Supervised learning algorithms learn from a labeled dataset, where each input data point is associated with an output label or target variable. On the other hand, unsupervised learning algorithms deal with an unlabeled dataset, where no such input-output relationship is provided.

2. Goal: The primary goal of supervised learning is to build a model that predicts or classifies target variables based on input features, whereas the main objective of unsupervised learning is to discover patterns, groupings, or relationships within the data.

3. Applications: Supervised learning algorithms are generally used for tasks like classification and regression, while unsupervised learning methods are utilized for clustering, dimensionality reduction, and anomaly detection.

One should choose unsupervised methods over supervised ones in the following scenarios:

1. Lack of labeled data: Unsupervised learning methods are well-suited for situations where there is a lack of labeled data or ground truth information, making it difficult to train supervised models.

2. Data exploration and pattern discovery: When the goal is to understand the underlying structure of the data or uncover hidden patterns, unsupervised learning techniques such as clustering and dimensionality reduction prove highly valuable.

3. Anomaly detection: In cases where one needs to identify unusual instances or outliers within the data, unsupervised learning methods can be employed effectively.

4. Feature extraction: Unsupervised learning methods can help to extract meaningful features from complex datasets, which can then be used as inputs for supervised learning algorithms to improve their performance.

In conclusion, the choice between supervised and unsupervised learning algorithms depends on the availability of labeled data, the overall objective, and specific use-cases. Additionally, both techniques can be combined in semi-supervised learning or transfer learning scenarios to leverage the strengths of each approach.

Which are the most popular unsupervised learning algorithms used in various fields, and what unique qualities do they have to offer?

The most popular unsupervised learning algorithms used in various fields include:

1. K-Means Clustering: This algorithm is used to partition a dataset into K clusters based on similarity. It does this by iteratively updating the centroids of the clusters until convergence. The unique quality of K-Means clustering is its simplicity, which makes it easy to implement and efficient to run, although K must be chosen in advance and the result depends on the initial centroids.

2. DBSCAN (Density-Based Spatial Clustering of Applications with Noise): DBSCAN identifies clusters of data points based on their density. It can find clusters of arbitrary shape, does not need the number of clusters specified in advance, and labels points in sparse regions as noise. Because it uses a single density threshold, it can struggle when clusters have very different densities.

3. Hierarchical Clustering: Hierarchical clustering algorithms build a tree-like structure to represent nested clusters. There are two primary approaches: agglomerative (bottom-up) and divisive (top-down). The dendrogram produced by hierarchical clustering allows for easy interpretation of the clustered data and determination of the optimal number of clusters.

4. t-Distributed Stochastic Neighbor Embedding (t-SNE): t-SNE is widely used for visualizing high-dimensional data in a lower-dimensional space, usually two or three dimensions. It represents similarities between data points as probability distributions and arranges the low-dimensional points so that those similarities are preserved as closely as possible. The algorithm is particularly useful for visualizing complex datasets with large numbers of features, helping researchers spot patterns such as clusters.

5. Principal Component Analysis (PCA): PCA is a dimensionality reduction technique that transforms data into a new coordinate system in which the first axis (the first principal component) captures the largest share of the variance, the second captures the most remaining variance while being orthogonal to the first, and so on. Keeping only the leading components reduces computational cost and noise while preserving most of the structure in the data.

6. Independent Component Analysis (ICA): ICA is a related linear technique that also re-expresses the data in a new set of components. Whereas PCA finds uncorrelated directions of maximal variance, ICA looks for statistically independent components, which makes it better suited to separating mixed signals (blind source separation) than to reducing dimensionality as such.

7. Autoencoders: Autoencoders are artificial neural networks used for unsupervised feature learning and dimensionality reduction. They consist of an encoder, which maps the input data to a lower-dimensional representation, and a decoder, which reconstructs the original input from the compressed representation. Autoencoders can be useful for tasks such as anomaly detection, denoising, and feature extraction.
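
To make the PCA description in item 5 concrete, the sketch below estimates the first principal component of a small dataset using power iteration on the covariance matrix. This is one simple way to find the leading direction and is an assumption of this example (libraries typically use a singular value decomposition instead). The function name and the data are invented.

```python
import math


def first_principal_component(rows, iterations=200):
    """Approximate the first principal component by power iteration.

    Returns (direction, explained_variance_ratio). Assumes the starting
    vector of all ones is not orthogonal to the leading direction.
    """
    n, d = len(rows), len(rows[0])
    means = [sum(row[j] for row in rows) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in rows]
    # Sample covariance matrix (d x d).
    cov = [
        [sum(r[a] * r[b] for r in centered) / (n - 1) for b in range(d)]
        for a in range(d)
    ]
    v = [1.0] * d
    for _ in range(iterations):
        # Multiply by the covariance matrix, then renormalize to unit length.
        w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Variance along v, as a share of the total variance.
    variance = sum(v[a] * sum(cov[a][b] * v[b] for b in range(d)) for a in range(d))
    total_variance = sum(cov[a][a] for a in range(d))
    return v, variance / total_variance


# Hypothetical 2-D data lying close to the line y = 2x.
rows = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 7.8), (5.0, 10.1)]
direction, ratio = first_principal_component(rows)
print(direction, ratio)
```

Here a single component explains almost all of the variance, so the two features could be replaced by one coordinate along `direction` with little loss.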

These unsupervised learning algorithms offer unique qualities that make them suitable for various applications in diverse fields such as finance, healthcare, marketing, and many more.

How can one determine the optimal parameters for an unsupervised learning algorithm to ensure the best possible performance on a particular dataset?

There is no guaranteed recipe for finding optimal parameters, but the following steps give a systematic way to tune an unsupervised learning algorithm for a particular dataset:

1. Data exploration and preprocessing: Begin by exploring the dataset and preprocessing it to remove any noise or irrelevant data. This may involve scaling, normalizing, or transforming data to make it suitable for the unsupervised learning algorithm.

2. Algorithm selection: Choose an appropriate unsupervised learning algorithm based on your specific problem, dataset size, and desired outcomes. Examples of unsupervised learning algorithms include clustering (e.g., K-means, hierarchical clustering), dimensionality reduction (e.g., PCA, t-SNE), and association rule mining (e.g., Apriori, Eclat).

3. Hyperparameter tuning: Experiment with different hyperparameter values to find the best combination for your specific algorithm. Some common hyperparameters in unsupervised learning algorithms include the number of clusters, distance metrics, and learning rates. You can use techniques such as grid search or random search to systematically explore different hyperparameter combinations.

4. Evaluation metrics: Since unsupervised learning has no labels or ground truth, evaluating performance is harder than in supervised learning. It is still important to pick metrics up front, such as within-cluster sum of squares (WCSS) or the silhouette score for clustering, explained variance for dimensionality reduction, or lift for association rule mining. Note that WCSS always decreases as the number of clusters grows, so it is usually read with the elbow method rather than simply minimized. Make sure to choose evaluation metrics that are relevant to your specific problem and dataset.

5. Assessing model performance: Analyze the performance of the unsupervised learning algorithm using the chosen evaluation metrics. You may need to iteratively tune the hyperparameters and re-evaluate the model until you achieve satisfactory performance.

6. Validation: Validate the results of the unsupervised learning algorithm through visualization, domain knowledge, or by comparing the clustering, dimensionality reduction, or association rules with known patterns in the data.

7. Iterative refinement: Continuously refine the algorithm, hyperparameter values, and preprocessing steps based on the performance and validation results to optimize the model further.
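
Steps 3 to 5 can be sketched for the clustering case with the silhouette score, one common label-free metric that rewards tight, well-separated clusters. The code below is a minimal version assuming Euclidean distance. The two candidate labelings are hypothetical stand-ins for what a clustering algorithm might return with 2 and 3 clusters, and the function name is invented for this example.

```python
def euclidean(p, q):
    """Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q)) ** 0.5


def silhouette_score(points, labels):
    """Mean silhouette coefficient, between -1 and 1 (higher is better).

    Requires at least two distinct labels.
    """
    clusters = {}
    for idx, label in enumerate(labels):
        clusters.setdefault(label, []).append(idx)
    total = 0.0
    for i, label in enumerate(labels):
        own = [j for j in clusters[label] if j != i]
        if not own:
            continue  # a singleton cluster contributes a silhouette of 0
        # a: mean distance to the other members of the point's own cluster.
        a = sum(euclidean(points[i], points[j]) for j in own) / len(own)
        # b: mean distance to the members of the nearest other cluster.
        b = min(
            sum(euclidean(points[i], points[j]) for j in members) / len(members)
            for other, members in clusters.items()
            if other != label
        )
        denom = max(a, b)
        if denom > 0:
            total += (b - a) / denom
    return total / len(points)


points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (8.0, 8.0), (8.2, 7.9), (7.8, 8.1)]
# Hypothetical candidate labelings for two hyperparameter settings.
candidates = {
    2: [0, 0, 0, 1, 1, 1],
    3: [0, 0, 1, 2, 2, 2],
}
scores = {k: silhouette_score(points, labels) for k, labels in candidates.items()}
best_k = max(scores, key=scores.get)
print(scores, best_k)
```

The same pattern extends to a grid search: produce a labeling for each hyperparameter combination, score it, and keep the best, while still checking the winner against domain knowledge as described in step 6.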

By following these steps, you can narrow down well-performing parameters for your unsupervised learning algorithm and have evidence that the results are meaningful for your specific dataset.