Welcome to my algorithms blog! In this article, we’ll explore the fascinating world of multiplication algorithms and learn which ones are best for different scenarios. Join me as we uncover the secrets of efficient number-crunching!

Choosing the Ideal Multiplication Algorithm: Boosting Efficiency and Speed in Calculations

When it comes to choosing the ideal multiplication algorithm, there are several factors to consider in order to boost efficiency and speed in calculations. Multiplication is a fundamental arithmetic operation that impacts various areas, including computer science and mathematics. Therefore, selecting the right algorithm is crucial for obtaining accurate and efficient results.

The traditional multiplication algorithm, which most people learn in school, is known as long multiplication. While this method works for small numbers, it can become tedious and time-consuming when dealing with larger numbers or more complex calculations.

For better performance and increased efficiency, more advanced multiplication algorithms have been developed, including the following:

1. Karatsuba algorithm: This is a fast multiplication algorithm that utilizes a divide-and-conquer approach. It requires fewer multiplications than the traditional method, resulting in improved calculation speed.

2. Toom-Cook algorithm: This family of methods generalizes the Karatsuba algorithm; its best-known member is Toom-3, which splits each operand into three parts. It multiplies large numbers by dividing them into several smaller pieces and recombining the partial results, which reduces the number of multiplications required.

3. Schönhage-Strassen algorithm: This algorithm applies Fast Fourier Transforms (FFT) for efficient multiplication of large integers. It is particularly useful for very large numbers, typically those with tens of thousands of digits or more.

4. Booth’s multiplication algorithm: This method is designed for multiplying signed binary numbers in two’s complement representation, which is essential in digital computing. It reduces the number of additions and subtractions required by treating each run of consecutive 1s in the multiplier as a single subtraction at the start of the run and a single addition at its end.
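To make the last item concrete, here is a minimal Python sketch of Booth's algorithm. The function name `booth_multiply` and the `bits` parameter are illustrative choices, not part of any library. As a simplification, the accumulator is an ordinary Python integer rather than a fixed-width register, so it cannot overflow.

```python
def booth_multiply(multiplicand: int, multiplier: int, bits: int = 8) -> int:
    """Multiply two signed integers that each fit in `bits` bits (two's complement)."""
    low, high = -(1 << (bits - 1)), (1 << (bits - 1)) - 1
    if not (low <= multiplicand <= high and low <= multiplier <= high):
        raise ValueError(f"operands must fit in {bits} signed bits")

    acc = 0                                   # accumulator (upper half of the product)
    q = multiplier & ((1 << bits) - 1)        # multiplier as an unsigned bit pattern
    q_prev = 0                                # the extra bit to the right of q

    for _ in range(bits):
        q0 = q & 1
        if (q0, q_prev) == (1, 0):            # start of a run of 1s: subtract
            acc -= multiplicand
        elif (q0, q_prev) == (0, 1):          # end of a run of 1s: add
            acc += multiplicand
        # Arithmetic right shift of the combined register acc:q:q_prev.
        q_prev = q0
        q = (q >> 1) | ((acc & 1) << (bits - 1))
        acc >>= 1                             # Python's >> is arithmetic for negative ints

    return (acc << bits) + q


print(booth_multiply(3, -4, bits=4))
```

Hardware implementations work with fixed-width registers instead, which is where most of the real design effort goes; this sketch only shows the add, subtract, and shift pattern.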

When evaluating multiplication algorithms, consider the size and representation of the numbers involved, the computational resources available, and how much implementation complexity you can accept. The integer algorithms listed above all produce exact results, so the practical trade-off is between asymptotic speed and per-call overhead: the asymptotically faster methods only pay off above a certain operand size.

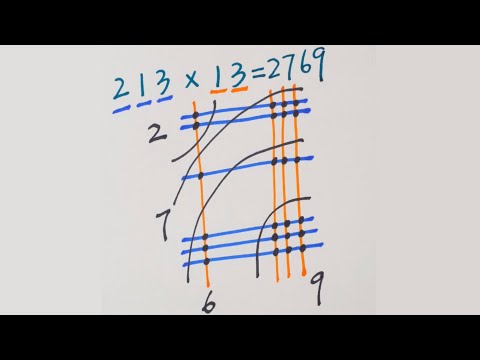

What is the most efficient multiplication algorithm?

The most efficient multiplication algorithm depends on the size of the numbers being multiplied. For small numbers, the traditional grade-school multiplication method is quite efficient, but as the numbers grow larger, other algorithms like the Karatsuba algorithm, Toom-Cook algorithm, and Schönhage-Strassen algorithm become more efficient.

For extremely large numbers, with tens of thousands of digits or more, FFT-based algorithms such as Schönhage-Strassen, including variants built on the Number Theoretic Transform (NTT), are the most efficient in practice. The integers used in cryptography (typically a few thousand bits) are well below that range, so cryptographic libraries usually rely on long multiplication or Karatsuba instead. In every case, it’s essential to consider the specific use case and performance trade-offs when choosing a multiplication algorithm.

What are the stages involved in the multiplication algorithm?

The standard long multiplication algorithm multiplies two numbers in several stages:

1. Initialization: Set the initial value of the product to zero.

2. Decomposition: Break down each of the operands (the numbers being multiplied) into its individual digits.

3. Multiplication of partial products: Multiply each digit of the first number by each digit of the second number, starting with the least significant digit. This will result in a series of partial products.

4. Handling carries: If the multiplication of two digits results in a number with more than one digit, carry the most significant digit(s) over to the next multiplication step.

5. Summation: Add up all the partial products, taking into account any carries from the previous step, to obtain the final product.

6. Output: Display or store the final product as the result of the multiplication algorithm.

In summary, the multiplication algorithm consists of initialization, decomposition, multiplication of partial products, handling carries, summation, and output stages. These stages ensure that the multiplication of two numbers is carried out effectively and accurately.
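The stages above can be sketched in Python as follows. The function name `long_multiply` is an illustrative choice, and the sketch assumes non-negative integers in base 10.

```python
def long_multiply(x: int, y: int) -> int:
    """Schoolbook multiplication of two non-negative integers, digit by digit."""
    if x < 0 or y < 0:
        raise ValueError("this sketch handles non-negative integers only")

    # Decomposition: digits stored least significant first.
    x_digits = [int(c) for c in reversed(str(x))]
    y_digits = [int(c) for c in reversed(str(y))]

    # Initialization: the product has at most len(x) + len(y) digits.
    result = [0] * (len(x_digits) + len(y_digits))

    # Partial products, carries, and summation are interleaved here.
    for i, a in enumerate(x_digits):
        carry = 0
        for j, b in enumerate(y_digits):
            total = result[i + j] + a * b + carry
            result[i + j] = total % 10
            carry = total // 10
        result[i + len(y_digits)] += carry

    # Output: reassemble the digits into an integer.
    return int("".join(str(d) for d in reversed(result)))


print(long_multiply(123, 456))  # 56088
```

The two nested loops make the quadratic cost visible: every digit of one operand meets every digit of the other.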

What is the most renowned algorithm for matrix multiplication?

The most renowned algorithm for matrix multiplication is Strassen’s algorithm. It multiplies two n × n matrices in O(n^log2(7)) ≈ O(n^2.81) arithmetic operations, compared with O(n^3) for the standard method, by computing each 2 × 2 block product with seven multiplications instead of eight. The improvement matters mainly for large matrices.
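As a small illustration, the sketch below applies Strassen's seven products to a single 2 × 2 case. The function name `strassen_2x2` is hypothetical. A full implementation would apply the same formulas recursively to matrix blocks and fall back to the standard method for small sizes.

```python
def strassen_2x2(A, B):
    """Multiply two 2x2 matrices using seven multiplications instead of eight."""
    (a11, a12), (a21, a22) = A
    (b11, b12), (b21, b22) = B

    # Strassen's seven products.
    m1 = (a11 + a22) * (b11 + b22)
    m2 = (a21 + a22) * b11
    m3 = a11 * (b12 - b22)
    m4 = a22 * (b21 - b11)
    m5 = (a11 + a12) * b22
    m6 = (a21 - a11) * (b11 + b12)
    m7 = (a12 - a22) * (b21 + b22)

    # Recombine the products into the four entries of the result.
    return [
        [m1 + m4 - m5 + m7, m3 + m5],
        [m2 + m4, m1 - m2 + m3 + m6],
    ]


print(strassen_2x2([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

The saving of one multiplication comes at the cost of extra additions and subtractions, which is why the method only pays off once the entries are themselves large blocks.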

What are the most efficient algorithms for large integer multiplication?

The most efficient algorithms for large integer multiplication are:

1. Karatsuba Algorithm – This algorithm uses a divide and conquer strategy to multiply two n-digit integers in a more efficient manner than the traditional method. It has a time complexity of O(n^log2(3)), which is faster than the O(n^2) of the standard long multiplication technique.

2. Toom-Cook Multiplication – This family of methods generalizes the Karatsuba algorithm by splitting the input numbers into more pieces. Its best-known member, Toom-3, splits each operand into three parts and has a time complexity of O(n^log3(5)) ≈ O(n^1.465), which beats Karatsuba for larger integers.

3. Schönhage-Strassen Algorithm – This algorithm is based on Fast Fourier Transform (FFT) and is particularly suitable for very large integers. It has a time complexity of O(n * log(n) * log(log(n))) and is faster than both Karatsuba and Toom-Cook for sufficiently large inputs.

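4. Fürer’s Algorithm – An asymptotic improvement over the Schönhage-Strassen algorithm, with a time complexity of O(n * log(n) * 2^(O(log*(n)))), where log* is the iterated logarithm function. It was the asymptotically fastest known algorithm until Harvey and van der Hoeven published an O(n * log(n)) algorithm in 2019. Both are mainly of theoretical interest, because their advantage only appears at astronomically large input sizes.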

5. Barrett Reduction – While not a direct multiplication algorithm, Barrett reduction is used to optimize modular multiplication involving large integers, which is a common operation in cryptographic applications.

Choosing the most efficient algorithm depends on the size of the integers being multiplied and the computing resources available. For small integers, long multiplication is fastest; for moderately large integers, Karatsuba and Toom-Cook are often preferred; for very large integers, Schönhage-Strassen is the usual practical choice. Fürer’s algorithm and the newer Harvey–van der Hoeven algorithm are asymptotically faster still, but they are not used in practice at realistic input sizes.
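Since Barrett reduction is the one item in the list that is not itself a multiplication algorithm, a short sketch may help show how it fits in. The helper names `barrett_setup`, `barrett_reduce`, and `mod_mul` are illustrative, and this is a simplified textbook variant rather than the code of any particular cryptographic library.

```python
def barrett_setup(modulus: int):
    """Precompute the shift k and the constant mu = floor(4**k / modulus)."""
    k = modulus.bit_length()
    mu = (1 << (2 * k)) // modulus   # the only division, done once per modulus
    return k, mu


def barrett_reduce(x: int, modulus: int, k: int, mu: int) -> int:
    """Compute x mod modulus for 0 <= x < modulus**2 using multiplies and shifts."""
    q = (x * mu) >> (2 * k)          # estimate of x // modulus (never too large)
    r = x - q * modulus
    while r >= modulus:              # a small, bounded number of corrections
        r -= modulus
    return r


def mod_mul(a: int, b: int, modulus: int, k: int, mu: int) -> int:
    """Modular multiplication of a and b, both already reduced below modulus."""
    return barrett_reduce(a * b, modulus, k, mu)


modulus = 1_000_003
k, mu = barrett_setup(modulus)
print(mod_mul(123_456, 654_321, modulus, k, mu) == (123_456 * 654_321) % modulus)
```

The point of the technique is that the expensive division happens once in `barrett_setup`; every later reduction for the same modulus uses only multiplications, shifts, and subtractions.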

How does the Karatsuba algorithm improve upon traditional multiplication methods?

The Karatsuba algorithm is a fast multiplication method that improves upon traditional long multiplication (the grade-school method). The key benefits of the Karatsuba algorithm lie in its divide-and-conquer strategy and its reduction of basic multiplication operations.

In traditional multiplication methods, the number of multiplication steps grows quadratically with the number of digits in the operands. For example, when multiplying two n-digit numbers using the long multiplication method, we have to perform n² basic multiplications.

The Karatsuba algorithm, on the other hand, reduces the number of basic multiplications required. It does so by breaking down the original numbers into smaller parts, recursively applying the algorithm, and then combining the results. This divide-and-conquer approach allows the Karatsuba algorithm to perform only three basic multiplications for two 2-digit numbers, instead of the four required by traditional methods.
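The two-digit case can be written out explicitly. In the notation below (the symbols a, b, c, d are introduced here for illustration), x = 10a + b and y = 10c + d:

$$
\begin{aligned}
x \cdot y &= (10a + b)(10c + d) = 100\,ac + 10\,(ad + bc) + bd,\\
ad + bc &= (a + b)(c + d) - ac - bd.
\end{aligned}
$$

Only the three products ac, bd, and (a + b)(c + d) are needed. For example, for 12 × 34 we get ac = 3, bd = 8, and (a + b)(c + d) = 3 × 7 = 21, so ad + bc = 21 − 3 − 8 = 10 and the product is 300 + 100 + 8 = 408.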

As a result, the overall complexity of the Karatsuba algorithm is O(n^log2(3)) ≈ O(n^1.585), which is asymptotically faster than the O(n²) of traditional multiplication methods. The improvement becomes more pronounced as the inputs grow, while for small inputs the extra additions and recursion overhead make long multiplication faster. This makes the Karatsuba algorithm well suited to large-number multiplication, such as that encountered in cryptography and computer algebra systems.
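A minimal recursive sketch in Python is shown below. The function name `karatsuba` and the decimal splitting are illustrative choices; production implementations split on machine words and switch to long multiplication below a tuned threshold.

```python
def karatsuba(x: int, y: int) -> int:
    """Multiply two non-negative integers with Karatsuba's three-product recursion."""
    if x < 0 or y < 0:
        raise ValueError("this sketch handles non-negative integers only")

    # Base case: a single-digit operand is multiplied directly.
    if x < 10 or y < 10:
        return x * y

    # Split both numbers around the middle of the longer one.
    half = max(len(str(x)), len(str(y))) // 2
    base = 10 ** half
    a, b = divmod(x, base)   # x = a * base + b
    c, d = divmod(y, base)   # y = c * base + d

    # Three recursive multiplications instead of four.
    ac = karatsuba(a, c)
    bd = karatsuba(b, d)
    cross = karatsuba(a + b, c + d) - ac - bd   # equals a*d + b*c

    return ac * base * base + cross * base + bd


print(karatsuba(1234, 5678))  # 7006652
```

Python's built-in integers already use Karatsuba internally for large operands, so this version is for study rather than speed.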

Can you compare and contrast the performance of Strassen’s algorithm and Schonhage-Strassen algorithm in matrix multiplication?

Strassen’s algorithm and the Schönhage-Strassen algorithm are both advanced algorithms, but they serve different purposes. Strassen’s algorithm is used to perform matrix multiplication more efficiently, while the Schönhage-Strassen algorithm is designed for fast integer multiplication.

Strassen’s Algorithm

Strassen’s algorithm improves on the standard technique for multiplying two square matrices of the same dimension. It is based on divide-and-conquer: each matrix is split into four blocks, and the block product is computed with seven recursive multiplications instead of eight. This reduces the operation count from O(n^3) to O(n^log2(7)) ≈ O(n^2.81). (The matrix multiplication exponent ω denotes the smallest achievable exponent; Strassen’s result shows that ω ≤ log2(7) ≈ 2.81, and later algorithms have lowered the bound further.) This makes it more efficient than the traditional approach for large matrices. However, its actual performance gain can vary depending on factors such as the size and sparsity of the input matrices, as well as the efficiency of the underlying hardware and software implementations, and for small matrices the standard method is usually faster.
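The exponent follows from the recurrence for the running time. Writing T(n) for the cost of multiplying two n × n matrices (a symbol introduced here for illustration), splitting into blocks of size n/2 gives:

$$
\begin{aligned}
\text{standard:}\quad T(n) &= 8\,T(n/2) + \Theta(n^2) \;\Rightarrow\; T(n) = \Theta(n^{3}),\\
\text{Strassen:}\quad T(n) &= 7\,T(n/2) + \Theta(n^2) \;\Rightarrow\; T(n) = \Theta(n^{\log_2 7}) \approx \Theta(n^{2.807}).
\end{aligned}
$$

The Θ(n²) term accounts for the block additions and subtractions, which are more numerous in Strassen's method but do not affect the exponent.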

Schönhage-Strassen Algorithm

The Schönhage-Strassen algorithm is a fast integer multiplication algorithm that employs Fast Fourier Transform (FFT) techniques to multiply large integers more efficiently than the grade-school method. It reduces the complexity of integer multiplication from O(n^2) to O(n*log(n)*log(log(n))) for n-bit integers, providing significant speedup for very large numbers. However, this algorithm is not directly applicable to matrix multiplication and becomes less effective for smaller integers due to the increased overhead introduced by the FFT.
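The core idea of transform-based multiplication can be sketched in a few lines. The code below is not the full Schönhage-Strassen algorithm: it is a simplified illustration that convolves decimal digits with a number-theoretic transform modulo one fixed prime. The prime 998244353 with primitive root 3 is a common choice that supports transform lengths up to 2^23; the function names `ntt` and `ntt_multiply` are illustrative.

```python
MOD = 998244353   # prime equal to 119 * 2**23 + 1
ROOT = 3          # primitive root modulo MOD


def ntt(values, invert=False):
    """Iterative number-theoretic transform; len(values) must be a power of two."""
    a = list(values)
    n = len(a)
    # Bit-reversal permutation.
    j = 0
    for i in range(1, n):
        bit = n >> 1
        while j & bit:
            j ^= bit
            bit >>= 1
        j |= bit
        if i < j:
            a[i], a[j] = a[j], a[i]
    # Butterfly passes over blocks of doubling length.
    length = 2
    while length <= n:
        w_len = pow(ROOT, (MOD - 1) // length, MOD)
        if invert:
            w_len = pow(w_len, MOD - 2, MOD)
        for start in range(0, n, length):
            w = 1
            for k in range(start, start + length // 2):
                u, v = a[k], a[k + length // 2] * w % MOD
                a[k] = (u + v) % MOD
                a[k + length // 2] = (u - v) % MOD
                w = w * w_len % MOD
        length *= 2
    if invert:
        n_inv = pow(n, MOD - 2, MOD)
        a = [value * n_inv % MOD for value in a]
    return a


def ntt_multiply(x: int, y: int) -> int:
    """Multiply non-negative integers by convolving their decimal digits."""
    if x < 0 or y < 0:
        raise ValueError("this sketch handles non-negative integers only")
    xd = [int(c) for c in reversed(str(x))]
    yd = [int(c) for c in reversed(str(y))]
    size = 1
    while size < len(xd) + len(yd):
        size *= 2
    fx = ntt(xd + [0] * (size - len(xd)))
    fy = ntt(yd + [0] * (size - len(yd)))
    coeffs = ntt([p * q % MOD for p, q in zip(fx, fy)], invert=True)
    # Carry propagation turns convolution coefficients back into digits.
    digits, carry = [], 0
    for coeff in coeffs:
        carry, digit = divmod(coeff + carry, 10)
        digits.append(digit)
    return int("".join(str(d) for d in reversed(digits)))


print(ntt_multiply(123456789, 987654321) == 123456789 * 987654321)
```

The transform setup visible here is the overhead mentioned above: for small operands it costs far more than a handful of direct digit products.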

In conclusion, while both Strassen’s algorithm and the Schönhage-Strassen algorithm offer improvements over their respective standard techniques, they cannot be directly compared with each other in the context of matrix multiplication, as they target different computational problems. Strassen’s algorithm is specifically tailored to optimize matrix multiplication, while the Schönhage-Strassen algorithm addresses the problem of fast integer multiplication.