Title: Is Genetic Algorithm Reinforcement Learning the Future of Artificial Intelligence?

Introduction:

Imagine a world where computers could learn and develop just like humans. A world where artificial intelligence (AI) algorithms can replicate human decision-making processes, creating a more efficient and effective future. This dream might be closer than you think, all thanks to the incredible advancements in genetic algorithms and reinforcement learning.

In this article, we will explore what genetic algorithms and reinforcement learning are and how they work together to create the ultimate AI experience. By reading this post, you will unlock important insights into these revolutionary technologies and answer the question: is genetic algorithm reinforcement learning the future of AI?

What are Genetic Algorithms?

Genetic algorithms (GAs) are a type of optimization algorithm that mimics the process of natural evolution, inspired by Charles Darwin’s theory of natural selection. These algorithms use principles such as mutation, crossover (recombination), and selection to iteratively search for the optimal solution to a problem. The main idea behind GAs is that a population of possible solutions evolves over time by combining the most promising individuals in each generation.
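
To make the selection, crossover, and mutation steps concrete, here is a minimal sketch of a genetic algorithm. It assumes a toy "OneMax" objective (maximize the number of 1s in a bit string); the function names, population size, mutation rate, and the use of tournament selection with elitism are illustrative choices, not something prescribed by the description above.

```python
import random

def one_max(bits):
    """Toy fitness (an assumption for this sketch): count the 1s."""
    return sum(bits)

def tournament(population, fitness, rng, k=3):
    """Selection: return the fittest of k randomly drawn individuals."""
    return max(rng.sample(population, k), key=fitness)

def crossover(a, b, rng):
    """One-point crossover: a prefix of `a` joined to a suffix of `b`."""
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]

def mutate(bits, rng, rate):
    """Mutation: flip each bit independently with probability `rate`."""
    return [1 - b if rng.random() < rate else b for b in bits]

def run_ga(fitness=one_max, length=20, pop_size=30, generations=40,
           mutation_rate=0.02, seed=0):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)]
                  for _ in range(pop_size)]
    best_per_generation = []
    for _ in range(generations):
        elite = max(population, key=fitness)
        best_per_generation.append(fitness(elite))
        children = [elite]  # elitism: carry the best individual over unchanged
        while len(children) < pop_size:
            parent_a = tournament(population, fitness, rng)
            parent_b = tournament(population, fitness, rng)
            child = crossover(parent_a, parent_b, rng)
            children.append(mutate(child, rng, mutation_rate))
        population = children
    return max(population, key=fitness), best_per_generation
```

Calling `run_ga()` returns the best bit string found and the best fitness recorded in each generation. Because the elite individual is always kept, that per-generation best never decreases, although nothing guarantees the true optimum is reached.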

What is Reinforcement Learning?

Reinforcement Learning (RL) is a type of machine learning where an agent learns to make decisions by interacting with its environment. The agent receives feedback in the form of rewards or penalties, which it uses to refine its decision-making process. Over time, the agent learns to choose the actions that maximize its cumulative reward, essentially learning the best strategy to achieve its goal.

Is Genetic Algorithm Reinforcement Learning possible?

When we combine GAs and RL, we get a powerful optimization approach known as Genetic Algorithm Reinforcement Learning (GARL). This combination leverages the strengths of both techniques – the ability to search and optimize complex spaces using GAs and the dynamic decision-making capabilities of RL.

GARL works by encoding the behavior of a reinforcement learning agent as a “chromosome,” a data structure that represents a potential solution in GAs. The chromosomes then evolve, guided by a fitness function that measures the performance of the RL agent in the task at hand. The process of evolution refines the policy used by the RL agent, improving its ability to achieve the goal.
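
The chromosome idea can be sketched as follows. This example assumes a hypothetical six-cell corridor in which the agent starts at cell 0 and must reach cell 5; the environment, the reward values, and every name in the code are invented for illustration. Each chromosome holds one action gene per non-terminal cell, and its fitness is the total reward the encoded policy collects in one episode.

```python
import random

GOAL = 5              # corridor cells 0..5; the last cell is the goal
ACTIONS = (-1, +1)    # gene 0 = step left, gene 1 = step right
MAX_STEPS = 20

def episode_return(chromosome):
    """Fitness: total reward from running the encoded policy once."""
    state, total = 0, 0.0
    for _ in range(MAX_STEPS):
        state = min(max(state + ACTIONS[chromosome[state]], 0), GOAL)
        if state == GOAL:
            return total + 10.0        # bonus for reaching the goal
        total -= float(GOAL - state)   # penalty grows with remaining distance
    return total

def evolve_policy(pop_size=20, generations=30, mutation_rate=0.1, seed=0):
    rng = random.Random(seed)
    genes = GOAL  # one action gene for each non-terminal cell
    population = [[rng.randrange(2) for _ in range(genes)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        ranked = sorted(population, key=episode_return, reverse=True)
        parents = ranked[: pop_size // 2]   # truncation selection
        children = [ranked[0]]              # elitism: keep the best policy
        while len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, genes)
            child = a[:cut] + b[cut:]       # one-point crossover
            child = [1 - g if rng.random() < mutation_rate else g
                     for g in child]        # bit-flip mutation
            children.append(child)
        population = children
    return max(population, key=episode_return)
```

Note that no gradient or value function appears anywhere: the only learning signal is the episode return used as fitness. In this toy setting the policy that always steps right (`[1, 1, 1, 1, 1]`) is the only one that reaches the goal.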

Benefits of Genetic Algorithm Reinforcement Learning

GARL has some key benefits over traditional reinforcement learning techniques:

1. Exploration-Exploitation Balance: One of the challenges in reinforcement learning is balancing the exploration of new actions against the exploitation of the best known actions. GARL can help with this balance by evolving multiple solutions in parallel, so that a diverse set of strategies is considered.

2. Global Optimization: Genetic algorithms search many regions of the solution space at once and do not rely on gradients, which can make GARL less prone than traditional gradient-based RL methods to getting trapped in local optima. There is no guarantee, however, that the global optimum will be found.

3. Scalability: GARL is highly parallelizable, meaning it can take advantage of modern multi-core processors or graphics processing units (GPUs) to speed up the learning process.

4. Transfer Learning: Solutions evolved for one problem can be used to seed the initial population for a related problem, which may help in developing more general-purpose AI agents that adapt to new tasks more quickly.

Applications of Genetic Algorithm Reinforcement Learning

GARL has been successfully applied in various fields, including:

1. Robotics: Robots can use GARL to learn complex motor skills, adapt to different environments, or cooperate with other robots in a team.

2. Game AI: Video game developers have used GARL to create intelligent game characters that can adapt their strategies to the player’s behavior.

3. Autonomous Vehicles: Self-driving cars can benefit from GARL to learn optimal driving strategies in various traffic conditions and environments.

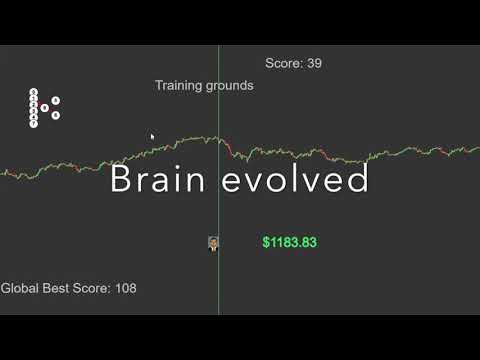

4. Financial Trading: GARL can be used to develop intelligent trading algorithms that learn to adapt to changing market conditions.

Conclusion

In summary, genetic algorithm reinforcement learning offers an exciting and promising approach for developing more intelligent and adaptable AI systems. By combining the strengths of genetic algorithms and reinforcement learning, GARL provides a robust framework for optimizing complex decision-making processes in various domains.

As we continue to push the boundaries of AI research, the integration of genetic algorithms and reinforcement learning may pave the way for artificial intelligence that rivals human intelligence. It is safe to say that the future of AI looks bright, and GARL will undoubtedly play an essential role in taking us there.

Can genetic algorithms be considered a form of reinforcement learning?

Genetic algorithms and reinforcement learning are both techniques used for optimization and problem-solving. However, they are fundamentally different in their approaches.

Genetic algorithms are a type of evolutionary algorithm inspired by natural selection and genetics. They involve a population of candidate solutions, which goes through the process of selection, crossover (recombination), and mutation to evolve into more optimal solutions over time. The main idea is to explore the search space using the principles of evolution, i.e., survival of the fittest.

On the other hand, reinforcement learning is an approach in machine learning where an agent learns to make decisions by interacting with its environment. The agent receives feedback in the form of rewards or penalties, and it aims to maximize the cumulative reward by developing a policy that determines the best actions to take in different situations. Reinforcement learning can adapt and improve the decision-making process over time based on the received feedback.

While they share some similarities, like being adaptive and aiming to find optimal solutions, it is not accurate to consider genetic algorithms as a form of reinforcement learning. They have different methodologies, and their underlying principles are derived from separate fields (evolutionary computation for genetic algorithms and machine learning for reinforcement learning).

In summary, genetic algorithms and reinforcement learning are distinct techniques for optimization and problem-solving. Neither should be considered a form of the other, although the two can be combined.

What kind of learning does the genetic algorithm represent?

The genetic algorithm represents a type of evolutionary computing and machine learning, inspired by the process of natural selection. This approach is used to find approximate solutions to optimization and search problems. It involves evolving a population of candidate solutions to improve their fitness with respect to a given objective function, iteratively applying crossover, mutation, and selection operations.

What algorithm can be utilized for reinforcement learning?

One of the most widely used algorithms for reinforcement learning is the Q-Learning algorithm. This algorithm is a model-free, value-based method that is particularly effective in finding an optimal action-selection policy for any given finite Markov Decision Process (MDP).

At its core, Q-Learning operates by estimating the action-value function, which represents the expected cumulative future reward of taking each possible action in each state. The algorithm updates these estimates iteratively, using an update rule derived from the well-known Bellman equation.
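
A minimal sketch of tabular Q-Learning follows. It assumes a hypothetical six-cell corridor environment; the `step` function, the reward values, and the hyperparameters (`alpha`, `gamma`, `epsilon`) are illustrative assumptions. The line that updates `q[state][action]` is the Bellman-style update: it moves the current estimate toward the observed reward plus the discounted value of the best action in the next state.

```python
import random

N_STATES, GOAL = 6, 5
ACTIONS = (-1, +1)    # action 0 = step left, action 1 = step right

def step(state, action):
    """Hypothetical environment: returns (next_state, reward, done)."""
    next_state = min(max(state + ACTIONS[action], 0), GOAL)
    if next_state == GOAL:
        return next_state, 10.0, True
    return next_state, -1.0, False

def q_learning(episodes=300, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # q[state][action]
    for _ in range(episodes):
        state = 0
        for _step in range(100):                # cap the episode length
            if rng.random() < epsilon:          # explore: random action
                action = rng.randrange(2)
            else:                               # exploit: greedy action
                action = max((0, 1), key=lambda a: q[state][a])
            next_state, reward, done = step(state, action)
            # Target: reward plus discounted best value of the next state
            # (no bootstrap term when the episode has ended).
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q
```

After training, the greedy policy can be read from the table by picking, for each state, the action with the larger Q-value.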

Advantages of Q-Learning include its ability to handle problems with stochastic transitions and rewards, and its convergence to the optimal action-values under certain conditions.

In recent years, Deep Q-Networks (DQN) have been introduced, combining Q-Learning with deep neural networks. This enables tackling more complex problems and learning better feature representations from raw input data.

Does the genetic algorithm fall under supervised learning?

The genetic algorithm does not fall under supervised learning. It is a type of evolutionary algorithm inspired by the process of natural selection. Genetic algorithms are used for optimization, search problems, and machine learning, but they operate differently from supervised learning methods: instead of learning from labeled input-output examples, they are guided by a fitness function that scores each candidate solution.

How do genetic algorithms and reinforcement learning techniques compare in terms of efficiency and applicability in solving complex optimization problems?

Genetic algorithms and reinforcement learning are two powerful techniques used in solving complex optimization problems. Each method has its own strengths and weaknesses, and their efficiency and applicability depend on the specific problem being tackled.

Genetic Algorithms (GA): Genetic algorithms are inspired by the process of natural selection and evolution. They work by using a population-based search through generations to optimize the solution for the problem at hand. The main steps in GA are selection, crossover (recombination), mutation, and sometimes elitism.

The efficiency of genetic algorithms often depends on the proper selection of parameters like population size, mutation rate, and crossover rate. Proper tuning of these parameters can lead to efficient convergence to the optimal solution.

The applicability of genetic algorithms is quite broad, as they can be used to solve a wide range of optimization problems, such as function optimization, machine learning model parameter optimization, feature selection, scheduling, and more. However, they may have difficulty handling problems with dynamic environments due to their lack of adaptability.

Reinforcement Learning (RL): Reinforcement learning is a type of machine learning technique that trains an agent to interact with an environment and make decisions to achieve a specific goal by maximizing cumulative rewards. The learning process involves trial and error, exploration, and exploitation to improve the agent’s performance.

The efficiency of reinforcement learning typically depends on the environment’s complexity and the available data. RL can converge faster than genetic algorithms if the problem can be modeled as a Markov Decision Process and the rewards give the agent informative feedback at each step. However, it can suffer from slow convergence in large state-action spaces or when dealing with sparse rewards.

The applicability of reinforcement learning is generally seen in problems involving decision-making and control, such as robotics, game playing, resource allocation, and recommendation systems. Its applicability can be limited in scenarios where defining a reward function or modeling the environment is challenging.

In summary, the efficiency and applicability of genetic algorithms and reinforcement learning techniques depend on the specific optimization problem being addressed. While genetic algorithms can be applied to a broader range of problems, reinforcement learning excels in decision-making and control tasks, especially when a well-defined reward function and environment model are available.

Can genetic algorithms be integrated with reinforcement learning methods to enhance convergence rates and solution quality in dynamic environments?

Yes, genetic algorithms can be integrated with reinforcement learning methods to enhance convergence rates and solution quality in dynamic environments. This combination is known as evolutionary reinforcement learning (ERL) or genetic-based reinforcement learning.

In ERL, genetic algorithms help explore the solution space more effectively by maintaining a diverse population of candidate solutions. Meanwhile, reinforcement learning provides a framework for learning optimal strategies based on trial-and-error interactions with the environment. By leveraging the strengths of both methods, ERL can potentially find better solutions faster than either method alone.

Integrating genetic algorithms with reinforcement learning can lead to improvements in several aspects:

1. Enhanced exploration and exploitation: The population-based nature of genetic algorithms promotes exploration of various solutions, while reinforcement learning drives the algorithm towards exploiting the best solutions.

2. Fast convergence: Genetic algorithms’ ability to search across multiple points in the solution space can potentially speed up the convergence rate of reinforcement learning methods when combined.

3. Adaptability to dynamic environments: The combination can help develop adaptable solutions that consider changing conditions in the environment, enabling robust performance even when faced with new challenges.

4. Better optimization: Integrating genetic algorithms with reinforcement learning can lead to improved optimization of solutions, combining the global search capabilities of genetic algorithms with the local search of reinforcement learning.

5. Scalability: ERL can potentially handle larger and more complex problems by benefiting from the parallel search capabilities of genetic algorithms and the adaptability of reinforcement learning.

It is important to note that the effectiveness of this integration may depend on the specific problem being tackled and the choice of hyperparameters for both genetic algorithms and reinforcement learning. With that caveat, combining genetic algorithms and reinforcement learning can offer advantages in solving complex optimization problems in dynamic environments.

What are the key differences between the exploration and exploitation strategies used in genetic algorithms versus those used in reinforcement learning algorithms?

The key differences between the exploration and exploitation strategies used in genetic algorithms versus those used in reinforcement learning algorithms are as follows:

1. Algorithm nature: Genetic algorithms are inspired by the process of natural selection, where the fittest individuals are selected for reproduction to produce offspring for the next generation. Reinforcement learning algorithms, on the other hand, focus on learning through interaction with an environment in order to maximize some notion of cumulative reward.

2. Exploration and Exploitation balance: In genetic algorithms, exploration is achieved by the mutation and crossover operations, which introduce random changes and new combinations in the genes of individuals, promoting diversity in the population. Exploitation is accomplished by the selection operation, which favors the fittest individuals when passing genes to the next generation. Reinforcement learning algorithms, in contrast, balance the two within the agent's own action choices in order to learn an optimal policy. Exploration involves taking random actions to discover unvisited states, while exploitation consists of choosing greedy actions based on the current estimate of the value function.

3. Representation of solutions: Genetic algorithms work with a population of candidate solutions encoded in a specific representation (e.g., binary strings), while reinforcement learning algorithms typically train a single agent that interacts with the environment and updates its value function or policy based on the experience it acquires.

4. Learning methods: Genetic algorithms use evolutionary mechanisms such as selection, crossover, and mutation to evolve the population towards better solutions. In reinforcement learning, learning methods can be mainly classified as model-based or model-free, where the agent learns an explicit model of the environment or learns directly from the interaction with the environment, respectively.

In conclusion, exploration and exploitation strategies differ significantly between genetic algorithms and reinforcement learning algorithms. The former relies on evolutionary operations to balance exploration and exploitation, while the latter uses random actions and greedy actions depending on the specific reinforcement learning technique employed.
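
The contrast summarized above can be sketched side by side. The function names and parameters here are illustrative assumptions: `epsilon_greedy` is one common way an RL agent mixes random and greedy actions, while `mutate` and `tournament_select` stand in for the evolutionary operators that play the exploring and exploiting roles in a GA.

```python
import random

def epsilon_greedy(q_values, epsilon, rng):
    """RL: explore with probability epsilon, otherwise act greedily."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))                      # explore
    return max(range(len(q_values)), key=q_values.__getitem__)   # exploit

def mutate(bits, rate, rng):
    """GA exploration: flip each gene independently with probability `rate`."""
    return [1 - b if rng.random() < rate else b for b in bits]

def tournament_select(population, fitness, rng, k=2):
    """GA exploitation: keep the fittest of k randomly drawn individuals."""
    return max(rng.sample(population, k), key=fitness)
```

In the RL function a single number, `epsilon`, sets the trade-off at every action choice. In the GA functions the trade-off is spread across operators: a higher mutation `rate` pushes toward exploration, and a larger tournament size `k` pushes toward exploitation.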